Borrowing from Wikipedia, the term DevOps is defined as…

DevOps (a clipped compound of "development" and "operations") is a culture, movement or practice that emphasizes the collaboration and communication of both software developers and other information-technology (IT) professionals while automating the process of software delivery and infrastructure changes. It aims at establishing a culture and environment where building, testing, and releasing software, can happen rapidly, frequently, and more reliably.

Now, I hate buzzwords as much as the next BTOM (a.k.a. bitter twisted old man)…

…but the idea behind DevOps, of building, testing, and releasing software more rapidly and reliably, is simply amazing and utterly necessary.

As system complexity has increased, as application functionality has ballooned, and as the cost of production downtime has skyrocketed, writing and testing code leaves one a long way from the promised land of published and deployed production code.

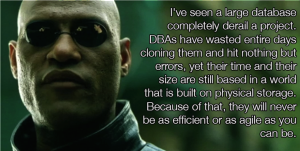

As explained by DevOps visionaries like Gene Kim, the biggest barrier to that promised land is data, in the form of databases cloned from production for development and testing, and in the form of application stacks cloned from production systems for development and testing.

The amount of time wasted waiting for data on which to develop or test dwarfs the amount of time spent developing or testing. Consequently, IT has learned to be satisfied with only occasional refreshes of dev/test systems from production, resulting in humorously inadequate dev/test systems, and that has been the norm.

There is a new norm in town.

Data virtualization, like server virtualization, breaks through the constraint. Over the past 10 years, IT has learned to wallow in the freedom of server virtualization, using tools like VMware and OpenStack to provision virtual machines for any purpose.

Unfortunately, data and storage did not benefit from virtualization as well. This has resulted in a white-hot nova in the storage industry, and while that is good news for the storage industry, it still means that IT has cloned from production to non-production the same way it has for the past 40 years, in other words slowly, expensively, and painfully.

And we have continued to do it the old way, slowly, expensively, and painfully, because we didn’t know any better.

The IT industry couldn’t see any better way to clone from production to non-production. Slow and painful was the norm.

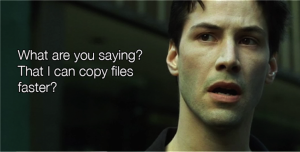

But once one realizes the nature of making copies, and how modern file-system technology can share at the block level, compress, and de-duplicate, suddenly making copies of databases and file-system directories becomes easy and inexpensive.
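
To make the block-sharing idea concrete, here is a toy in-memory sketch in Python. It is not how any particular file system or data-virtualization product works; the `BlockStore` and `Volume` classes, the 4 KB block size, and the use of SHA-256 and zlib are all assumptions chosen purely for illustration. What it tries to show is that a "copy" can be nothing more than a list of references to shared blocks, so ten clones cost almost nothing until somebody writes to one of them.

```python
import hashlib
import zlib

BLOCK_SIZE = 4096  # hypothetical block size for this toy model


class BlockStore:
    """Toy content-addressed store: identical blocks are kept only once."""

    def __init__(self):
        self.blocks = {}  # digest -> compressed block bytes

    def put(self, data: bytes) -> str:
        digest = hashlib.sha256(data).hexdigest()
        if digest not in self.blocks:                  # de-duplicate
            self.blocks[digest] = zlib.compress(data)  # compress
        return digest

    def get(self, digest: str) -> bytes:
        return zlib.decompress(self.blocks[digest])


class Volume:
    """A 'copy' of the data: just a list of references into the shared store."""

    def __init__(self, store: BlockStore, refs=None):
        self.store = store
        self.refs = list(refs or [])

    @classmethod
    def from_bytes(cls, store: BlockStore, data: bytes) -> "Volume":
        refs = [store.put(data[i:i + BLOCK_SIZE])
                for i in range(0, len(data), BLOCK_SIZE)]
        return cls(store, refs)

    def clone(self) -> "Volume":
        # Copies the reference list only; no block data is duplicated.
        return Volume(self.store, self.refs)

    def write_block(self, index: int, data: bytes) -> None:
        # Only this volume's reference changes; other clones are untouched.
        self.refs[index] = self.store.put(data)

    def read(self) -> bytes:
        return b"".join(self.store.get(ref) for ref in self.refs)


if __name__ == "__main__":
    store = BlockStore()
    # 100 distinct blocks standing in for a "production" database.
    data = b"".join(i.to_bytes(4, "big") * (BLOCK_SIZE // 4) for i in range(100))
    production = Volume.from_bytes(store, data)
    print("blocks after loading production:", len(store.blocks))

    dev_copies = [production.clone() for _ in range(10)]
    print("blocks after 10 clones:", len(store.blocks))

    dev_copies[0].write_block(0, b"x" * BLOCK_SIZE)
    print("blocks after one developer changes one block:", len(store.blocks))
    print("production unchanged:", production.read() == data)
```

In this model, ten clones add no new blocks, and a change in one clone adds only the single block that differs, while production and the other nine clones still read the original data.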

Here is a thought-provoking question: why doesn’t every individual developer and tester have their own private full systems stack? Why can’t they have several of them, one or more for each task on which they’re working?

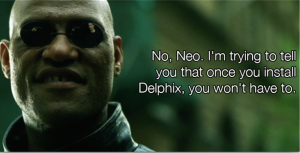

I can literally hear all of the other BTOMs scoffing at that question: “Nobody has that much infrastructure, you idiot!”

And that is the point. You certainly do.

You just don’t have the right infrastructure.

This was presented at the Collaborate 2016 conference in Las Vegas on Monday 11-April 2016.

You can download the slidedeck here.